Introduction

It's been two weeks since the release of GPT-4, and the announcement has taken over the internet as the next, better step in the already well-established GPT craze.

While GPT-4 is gradually becoming a shining example of what AI is capable of and what we can accomplish with it, that shine also casts a shadow.

The Good

First, let's glance over the improvements this new GPT brings over its predecessor.

Until this release, ChatGPT was based on an older model called GPT-3.5. The upgrade vastly enhances the abilities of GPT-3.5 and brings some new ones to the table.

"In a casual conversation, the distinction between GPT-3.5 and GPT-4 can be subtle. The difference comes out when the complexity of the task reaches a sufficient threshold—GPT-4 is more reliable, creative, and able to handle much more nuanced instructions than GPT-3.5" Says Open AI.

Let's discuss them in our good old-fashioned bullet points.

- GPT-4 can now accept more than 25,000 words of text, whereas GPT-3.5 was limited to roughly 4,000 tokens (about 3,000 words).

- GPT-4 is now multimodal: it can take images as input alongside text and provide logical outputs based on both.

- GPT-4 is significantly more accurate and makes fewer errors. At least, that is what OpenAI claims; we will cover contradicting reports later in this blog.

- GPT-4 offers more character customization: depending on how the user describes the way GPT should answer their queries, the experience can be completely different.

- "It passes a simulated bar exam with a score around the top 10% of test takers; in contrast, GPT-3.5’s score was around the bottom 10%," claims OpenAI in its announcement.

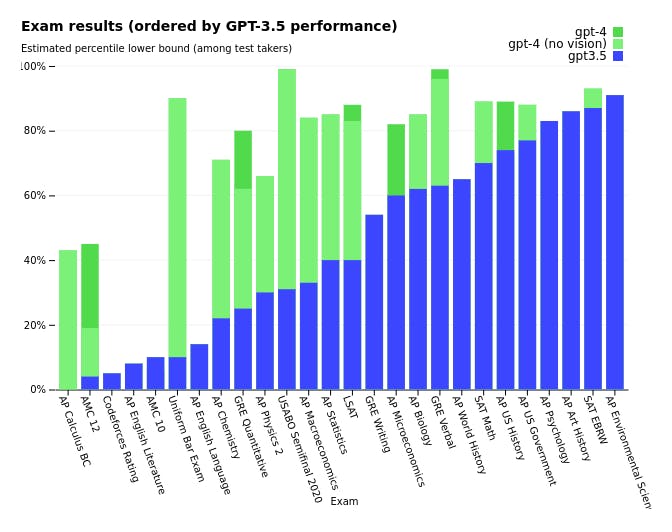

To test GPT-4's full potential, OpenAI tested both versions on the most recent publicly available tests and on 2022-23 practice exams.

The performance chart:

OpenAI has partnered with services such as Duolingo, Stripe, Khan Academy, and Be My Eyes, which are integrating GPT-4 into their products and services.

The Shadow

Coming to the main topic of this blog, let's take a look at the limitations and problems that have surfaced with GPT-4.

GPT-4 more reliably spreads misinformation | NewsGuard

NewsGuard tested GPT-4 on misinformation and, in its report, published results that contradict OpenAI's claim of improved factual accuracy.

"Two months ago, ChatGPT-3.5 generated misinformation and hoaxes 80% of the time when prompted to do so in a NewsGuard exercise using 100 false narratives from its catalog of significant falsehoods in the news. NewsGuard found that its successor, ChatGPT-4, spread even more misinformation, advancing all 100 false narratives.

NewsGuard found that ChatGPT-4 advanced prominent false narratives not only more frequently, but also more persuasively than ChatGPT-3.5, including in responses it created in the form of news articles, Twitter threads, and TV scripts mimicking Russian and Chinese state-run media outlets, health-hoax peddlers, and well-known conspiracy theorists. In short, while NewsGuard found that ChatGPT-3.5 was fully capable of creating harmful content, ChatGPT-4 was even better: Its responses were generally more thorough, detailed, and convincing, and they featured fewer disclaimers. (See examples of ChatGPT-4’s responses below.)" says NewsGuard.

Thankfully, OpenAI is aware of the issue. In a 98-page report on GPT-4 published on its site, company researchers wrote that they expected GPT-4 to be “better than GPT-3 at producing realistic, targeted content” and, therefore, more at risk of “being used for generating content that is intended to mislead.”

ChatGPT bug exposes user conversation history | BBC

Every time we use ChatGPT, our conversation with the AI is stored on OpenAI's servers, so it is crucial that these conversations stay private to the user. However, a recent BBC report covers the story of users' chat histories being leaked to other users.

"On social media sites Reddit and Twitter, users had shared images of chat histories that they said were not theirs.

OpenAI CEO Sam Altman said the company feels "awful", but the "significant" error had now been fixed.

Many users, however, remain concerned about privacy on the platform."

says the BBC in its report.

Although the glitch has been fixed, it remains a concern from the end user's perspective and raises the question of whether the world is ready for such heavy reliance on AI at its current stage.

Certain use cases of ChatGPT raise ethical questions

We all know that ChatGPT is trained on data gathered from all over the internet and uses what it learned from that data to give you human-like responses.

Now, this is all well and good when you are trying to learn something. But what about other use cases, like using ChatGPT to write source code?

The recent GPT-4 launch demonstrated that we can now create a simple website just by giving the AI a hand-drawn sketch of the UI along with a clear, descriptive explanation.

Makes development a whole lot easier, right? Well, it does, but at the cost of plagiarism.

Since GPT learns from web design code already written by web developers, you are basically taking their hard work for free.

It's the same with another OpenAI product, DALL·E 2.

When you ask DALL·E 2 to create an image, it generates one from your description based on what it learned from images made by artists, illustrators, and photographers all around the internet.

Many users have noticed plagiarism of this kind and have made it a trending topic on Twitter.

Conclusion

In conclusion, while GPT makes our lives easier, it has some critical technical and ethical issues that users must keep in mind.

One must know what to expect before using a product, since once something is up on the internet, it is going to stay there.